Imagine human language as a vast city. Every word is like a house, standing alone, holding meaning that seems obvious to us. But to a machine, these houses look identical from the outside. The challenge of NLP embeddings is to let machines walk inside, understand context, observe relationships, and learn how meaning shifts with company. Instead of treating words as isolated symbols, embeddings help models feel the temperature of a sentence, the tone of a phrase, and the subtle shade of intention hidden between syllables. Many learners begin this journey through structured training programs, such as an AI course in Pune, where they encounter how embeddings unlock fluent communication between humans and machines.

To understand embeddings, we explore the evolution of how NLP has moved from static meanings to dynamic, context-aware understanding: from Word2Vec’s elegant simplicity to BERT’s deep insight.

The Foundation: Why Words Need Representation

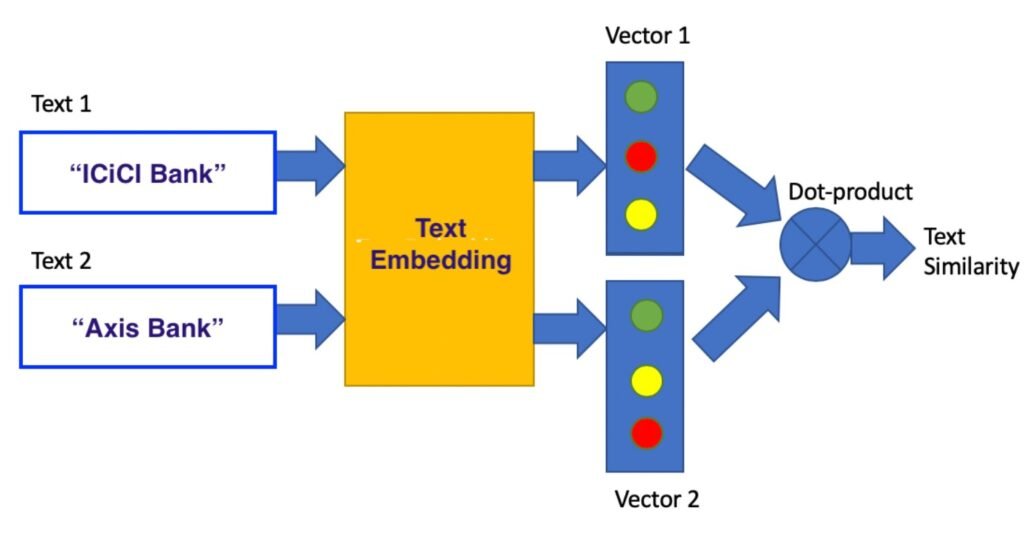

Computers cannot interpret letters the way humans do. They rely on numbers, so a word must be translated into something numeric before a computer can handle it. But simply assigning each word an index number would make language cold and disconnected. The words river and stream would have no relationship, even though humans see them as cousins in meaning. Embeddings solve this by mapping each word to a vector in a multi-dimensional space where semantic relationships appear in geometric form. Words that live close together in this space tend to share meaning, usage, and emotional character. The city becomes a map, and meaning becomes distance, direction, and shape.
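To make "meaning becomes distance" concrete, here is a minimal sketch that compares words by cosine similarity. The three-dimensional vectors and the extra word invoice are hypothetical values chosen by hand for illustration; real embeddings are learned from text and usually have hundreds of dimensions.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-picked for illustration only.
embeddings = {
    "river":   [0.9, 0.8, 0.1],
    "stream":  [0.8, 0.9, 0.2],
    "invoice": [0.1, 0.0, 0.9],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

sim_close = cosine_similarity(embeddings["river"], embeddings["stream"])
sim_far = cosine_similarity(embeddings["river"], embeddings["invoice"])

print(f"river vs stream:  {sim_close:.2f}")
print(f"river vs invoice: {sim_far:.2f}")
```

With these toy values, river and stream point in almost the same direction while river and invoice do not, which is exactly the relationship a plain index number cannot express.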

Word2Vec: The First Big Leap

Word2Vec was like giving language its first compass. Introduced in 2013 by researchers at Google, it used a shallow neural network to encode words into vectors by learning which words tend to appear near each other. It offered two training approaches:

- Skip-gram, which predicts the surrounding context words given a target word

- Continuous Bag of Words (CBOW), which predicts the target word given its surrounding context words
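The difference between the two objectives is easiest to see in the training examples each one generates. The sketch below only builds those examples; it does not train a model. The function names, the window size, and the sample sentence are assumptions made for illustration.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram: one (target, context_word) pair per neighbor in the window."""
    pairs = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

def cbow_pairs(tokens, window=2):
    """CBOW: one (context_words, target) example per position."""
    pairs = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        pairs.append((context, target))
    return pairs

tokens = "the river flows to the sea".split()

for target, context_word in skipgram_pairs(tokens, window=1)[:4]:
    print(f"skip-gram: given {target!r}, predict {context_word!r}")

for context, target in cbow_pairs(tokens, window=1)[:3]:
    print(f"CBOW: given {context}, predict {target!r}")
```

Skip-gram turns each position into several small prediction tasks, while CBOW turns it into a single task with the whole window as input.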

It didn’t look at full grammar. It didn’t worry about sentence structure. It simply observed how words coexist, forming clusters of meaning like neighborhoods. Words like king, queen, man, and woman settled into a space where relationships could be expressed mathematically:

king - man + woman ≈ queen

This equation became legendary. It showed that meaning is not just symbolic but directional.
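The arithmetic can be sketched with toy vectors. The values below are hypothetical and hand-built so that the three dimensions loosely stand for royalty, maleness, and femaleness; learned Word2Vec vectors have no such labeled axes, and the relationship holds only approximately. As is common practice for this kind of query, the input words are excluded from the search.

```python
import math

# Hypothetical vectors: [royalty, male, female]. Hand-picked, not learned.
vectors = {
    "king":   [0.9, 0.9, 0.1],
    "queen":  [0.9, 0.1, 0.9],
    "man":    [0.1, 0.9, 0.1],
    "woman":  [0.1, 0.1, 0.9],
    "prince": [0.7, 0.9, 0.1],
}

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def analogy(a, b, c):
    """Return the word closest to vector(a) - vector(b) + vector(c)."""
    query = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine_similarity(query, vectors[w]))

nearest = analogy("king", "man", "woman")
print(nearest)
```

Subtracting man from king removes the "male" direction, and adding woman introduces the "female" one, so the result lands next to queen in this toy space.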

Yet Word2Vec had a limitation. Every word had one fixed vector. The word bank received the same vector whether it referred to a riverbank or a financial institution. The city map could not yet show the shifting emotional weather of language.

Beyond Static Meaning: Contextual Embeddings

Language is fluid. Words change their clothing depending on the sentence they are placed in. To capture this, newer models began treating meaning as contextual. Instead of one fixed vector per word, meaning became something that could reshape itself like clay, molded by the sentence. This was a turning point in NLP research and is often explained in depth during structured certification training, such as an AI course in Pune, where learners observe how context-awareness improves accuracy on language understanding tasks.

This shift came from deeper models using recurrent neural networks and attention-based architectures. But one model changed everything.

BERT: Understanding Language in Both Directions

Bidirectional Encoder Representations from Transformers (BERT) revolutionized embeddings by conditioning each word’s representation on the words to its left and to its right at the same time, rather than reading only left-to-right or only right-to-left. BERT observes every word in relation to all others in a sentence.

If Word2Vec gave language a compass, BERT gave it a panoramic view.

BERT relies on the Transformer architecture, which introduced the powerful mechanism of self-attention. Instead of processing text sequentially, Transformers allow models to weigh the importance of each word with respect to others. In a sentence like:

She sat by the bank and listened to the water,

BERT’s representation of bank is shaped by nearby words such as water, which pulls it toward the river’s-edge sense rather than the financial one.

This makes embeddings dynamic, nuanced, and deeply contextual. The meaning is not stored in the word alone but in its relationships.
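A heavily simplified sketch of self-attention shows how the same word can end up with different vectors in different sentences. Everything here is an assumption made for illustration: the two-dimensional vectors (loosely "water-related" and "finance-related") are hand-picked, the sentences are reduced to three tokens, and the token vectors are used directly as queries, keys, and values. A real BERT model uses learned projections, multiple attention heads, positional information, and many stacked layers.

```python
import math

# Hypothetical static vectors: [water-related, finance-related].
static = {
    "the":   [0.1, 0.1],
    "bank":  [1.0, 1.0],   # ambiguous on its own
    "water": [2.0, 0.0],
    "loan":  [0.0, 2.0],
}

def softmax(scores):
    peak = max(scores)                       # subtract max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-layer scaled dot-product attention with no learned weights."""
    vectors = [static[t] for t in tokens]
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Score the query token against every token in the sentence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # The contextual vector is the attention-weighted mix of all tokens.
        mixed = [sum(w * v[d] for w, v in zip(weights, vectors))
                 for d in range(dim)]
        outputs.append(mixed)
    return outputs

bank_by_river = self_attention(["the", "bank", "water"])[1]
bank_for_money = self_attention(["the", "bank", "loan"])[1]

print("static bank:       ", static["bank"])
print("bank near 'water': ", [round(x, 2) for x in bank_by_river])
print("bank near 'loan':  ", [round(x, 2) for x in bank_for_money])
```

The static lookup returns the same vector for bank in both sentences, while the attention output leans toward the water dimension in one and the finance dimension in the other.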

The Power of Contextualized Representations

Contextual embeddings allow NLP systems to handle ambiguity, idioms, and sentiment with greater sensitivity. They are the backbone of modern applications like:

- Chatbots that respond conversationally

- Search engines that understand intent rather than keywords

- Translation systems that preserve tone and structure

- Sentiment analysis systems that are better at picking up sarcasm and emotional nuance

Where early embeddings provided the neighborhood, contextual embeddings provide the climate, mood, and moment.

Conclusion

The evolution from Word2Vec to BERT tells a story of language technology learning to listen rather than merely record. Word2Vec captures associations between words, while BERT captures how a word’s meaning depends on the sentence around it. Language stopped being a set of frozen definitions and became a living landscape of meaning.

As NLP continues advancing with models that are even more context-aware and emotionally intelligent, the future promises systems that grasp not only what we say but why we say it. Embeddings form the bridge, letting machines translate words not just into numbers but into understanding.